Physics content of the dataset

The proton-proton collisions taking place at the LHC can lead to the production and observation of many different processes predicted by the Standard Model (SM) of particle physics16,17,18. A brief summary of the SM particle content can be found in Refs. 19,20. The rate at which each of these processes occurs can be calculated within the SM mathematical framework and then validated by the measurements performed by the experiments (a summary of the CMS cross section measurements is publicly available). In this paper, we focus on events containing electrons (e) or muons (μ), light particles that, together with taus (τ) and their neutrino partners, form the three lepton families. In principle, we could have considered a dataset with no filter. While this would certainly be a more realistic representation of an unbiased L1T stream, generating such a dataset requires computing resources beyond our capabilities. Instead, we decided to apply a single-lepton filter, which makes the dataset simulation tractable.

Within the limited size of a typical LHC detector, electrons and muons can be considered stable particles, i.e., they do not decay inside the detector and are directly observed while crossing the detector material. In contrast, τ leptons are much heavier than electrons and muons and, as a consequence, short-lived: they quickly decay into lighter particles, and in a fraction of these decays e and μ are produced. At the LHC, the most abundant source of high-energy leptons is the production of W and Z bosons21, which are among the heaviest SM particles, with masses of about 80 GeV and 91 GeV (roughly 86 and 97 proton masses), respectively. Once produced, they quickly decay into other particles; in a small fraction of cases, these particles are leptons. W and Z bosons are mainly produced directly in proton collisions, but a sizable fraction of W bosons originates from the decay of top quarks (t) and antiquarks (t̄). Being heavy and highly unstable, the top quark quickly decays into a W boson and a bottom quark, giving rise to signatures with only collimated sprays of hadrons, called jets, or with one e, μ, or τ, a neutrino, and multiple jets. Leptons can also originate from rarer sources of W and Z bosons, such as Higgs boson decays or multi-boson production. Given the small production probability of these processes, we ignore them in this study.

As predicted by quantum chromodynamics (QCD)22, most of the LHC collisions result in the production of gluons and of quarks other than the top quark (up, down, strange, charm, and bottom). Since these quarks and gluons carry a net colour charge, colour confinement prevents them from existing freely, and they are not directly observed. Instead, they combine into colour-neutral hadrons in a process called hadronisation, which leads to jets. Leptons can sometimes be produced inside jets, typically from the decay of unstable hadrons. Because QCD multijet production is by far the most abundant process in LHC collisions, this contribution is sizable and is therefore taken into account.

Dataset

The processes listed above are the main contributors to an e or μ data stream, i.e., the set of collision events selected because they contain an e or μ with energy above a given threshold. One of the datasets presented in this paper consists of the simulation of such a stream. In addition, benchmark examples of new-physics processes producing leptons are given. These processes consist of the production of hypothetical, as yet unobserved, particles. They serve as examples of data anomalies that could be used to validate the performance of an AD algorithm. Details on these processes can be found in Refs. 9,10.

Moreover, we published a blackbox dataset containing a mixture of SM processes and a secret signal process. Events in this dataset are uniquely labeled by an event number, which allows each event to be linked to its entry in a ground-truth dataset consisting of one bit per event: 0 for SM events and 1 for new-physics events. The ground-truth dataset is stored on a private cloud storage area at CERN. By not publishing it, we ensure that the distributed blackbox is unlabeled for external developers. The blackbox is intended to be used to independently validate the performance of AD algorithms developed with the other datasets presented here.
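
The event number acts as the join key between the unlabeled blackbox and the private ground truth. A minimal sketch of that comparison follows, assuming both sides are available as plain dictionaries keyed by event number; the function name `evaluate_blackbox` and this dictionary layout are hypothetical and do not describe the published file format.

```python
# Hypothetical sketch: compare AD decisions on the blackbox with the
# ground-truth bits (0 = SM, 1 = new physics), matched by event number.
# Plain dicts stand in for the real files; their layout is an assumption.

def evaluate_blackbox(scores, truth, threshold):
    """Return (signal efficiency, SM acceptance) for events with score > threshold.

    scores: dict mapping event number -> anomaly score from an AD algorithm
    truth:  dict mapping event number -> ground-truth bit (0 or 1)
    """
    counts = {0: [0, 0], 1: [0, 0]}  # bit -> [events seen, events flagged]
    for event_number, score in scores.items():
        bit = truth[event_number]  # the event number is the join key
        counts[bit][0] += 1
        counts[bit][1] += int(score > threshold)

    def rate(bit):
        seen, flagged = counts[bit]
        return flagged / seen if seen else 0.0

    return rate(1), rate(0)
```

Since the truth bits are not distributed, only the holders of the ground truth can run such a comparison; external developers submit scores keyed by event number.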

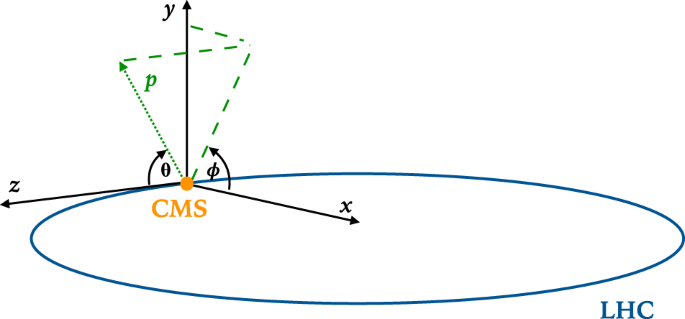

To describe the data, we use a right-handed Cartesian coordinate system with the z axis oriented along the beam axis, the x axis pointing toward the center of the LHC ring, and the y axis oriented upward, as shown in Fig. 2. The x and y axes define the transverse plane, while the z axis identifies the longitudinal direction. The azimuthal angle ϕ is measured with respect to the x axis; its value is given in radians, in the [−π, π] range. The polar angle θ is measured from the positive z axis and is used to compute the pseudorapidity η = −ln(tan(θ/2)). The transverse momentum (pT) is the projection of the particle momentum on the (x, y) plane. We use natural units such that c = ħ = 1 and express energy in units of electronvolts (eV) and their multiples (e.g., GeV).
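
As an illustration of these definitions, the sketch below converts a Cartesian momentum (px, py, pz) into the (pT, η, ϕ) coordinates used in the dataset. The function name `kinematics` is hypothetical; the sketch assumes a nonzero transverse momentum, since η diverges along the beam axis.

```python
import math

def kinematics(px, py, pz):
    """Return (pT, eta, phi) for a momentum vector in the frame described above.

    Assumes pT > 0; eta diverges for particles along the beam axis.
    """
    pt = math.hypot(px, py)        # projection on the (x, y) plane
    phi = math.atan2(py, px)       # azimuthal angle from the x axis, in [-pi, pi]
    theta = math.atan2(pt, pz)     # polar angle from the positive z axis, in (0, pi)
    eta = -math.log(math.tan(theta / 2.0))  # pseudorapidity
    return pt, eta, phi
```

The expression η = −ln(tan(θ/2)) is equivalent to asinh(pz/pT), which can serve as a numerical cross-check.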

The reference system used to describe the momentum coordinates of the particles in the dataset.

Each event is represented by a list of four-momenta for high-level reconstructed objects: muons, electrons, and jets. In order to emulate the limited bandwidth of a typical L1T system, we consider only the first 4 muons, 4 electrons, and 10 jets in the event, selected after ordering the candidates by decreasing pT. If an event contains fewer objects of a given type, the missing entries are zero-padded to preserve the size of the input, as is done in realistic L1T systems. Each object is represented by its pT, η, and ϕ values. In addition, we consider the magnitude and ϕ coordinate of the missing transverse energy (MET), defined as the vector equal in magnitude and opposite in direction to the vector sum of the transverse momenta of all the reconstructed particles in the event.
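
The fixed-size representation can be sketched as follows: objects are sorted by decreasing pT, truncated to 4 electrons, 4 muons, and 10 jets, zero-padded, and preceded by the MET computed as minus the vector sum of transverse momenta. The row order (MET, electrons, muons, jets), the helper names, and the choice to sum only the listed objects for MET are assumptions made for illustration.

```python
import math

# Hypothetical sketch of the fixed-size event layout described above.
# Objects are (pt, eta, phi) tuples. The slot counts come from the text;
# the row order (MET, electrons, muons, jets) is an illustrative assumption.
N_ELECTRONS, N_MUONS, N_JETS = 4, 4, 10
EMPTY = (0.0, 0.0, 0.0)

def leading(objects, n):
    """Keep the n highest-pT objects and zero-pad to exactly n slots."""
    ordered = sorted(objects, key=lambda obj: obj[0], reverse=True)[:n]
    return ordered + [EMPTY] * (n - len(ordered))

def missing_et(objects):
    """Magnitude and phi of minus the vector sum of the objects' transverse momenta.

    Assumption: only the objects passed in are summed, whereas the text
    defines MET over all reconstructed particles in the event.
    """
    met_x = -sum(pt * math.cos(phi) for pt, _, phi in objects)
    met_y = -sum(pt * math.sin(phi) for pt, _, phi in objects)
    return math.hypot(met_x, met_y), math.atan2(met_y, met_x)

def build_event(electrons, muons, jets):
    """Return 1 + 4 + 4 + 10 = 19 rows of (pT, eta, phi)."""
    met, met_phi = missing_et(electrons + muons + jets)
    rows = [(met, 0.0, met_phi)]  # MET has no eta; its slot is left at 0
    rows += leading(electrons, N_ELECTRONS)
    rows += leading(muons, N_MUONS)
    rows += leading(jets, N_JETS)
    return rows
```

Zero-padding keeps the input size constant regardless of object multiplicity, which is what a fixed-width hardware input requires.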

Since the current trigger system was not designed with this application in mind, we assumed that any deployment would have to be as minimally intrusive as possible. This is why the event data format is built out of quantities available at the last stage of the trigger (taking the CMS design as a reference), under the assumption that any AI-based algorithm running on this information would be executed as the last step in the L1-trigger chain of computations.

Once generated, events are filtered using a custom selection algorithm, coded in Python. This filter requires at least one reconstructed electron with pT > 23 GeV and |η| < 3, or at least one reconstructed muon with pT > 23 GeV and |η| < 2.1. For the events that pass this requirement, up to ten jets with pT > 15 GeV within |η| < 4 are included in the event, together with up to four muons with pT > 3 GeV within |η| < 2.1, up to four electrons with pT > 3 GeV within |η| < 3, and the missing transverse energy, as defined earlier. Given these requirements, the four SM processes listed below provide a realistic approximation of an L1T data stream. While the dataset has an L1-like format, its physics content is not that of an unbiased trigger (zero bias data), because of this single-lepton pre-filtering. As explained in Refs. 9,23, this cut was introduced for practical reasons, to make the dataset manageable given our limited computing resources.
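
A minimal sketch of such a filter is shown below. The cut values are those stated above; the function names and the (pt, eta, phi) tuple layout are hypothetical, and this is not the authors' selection code.

```python
# Hypothetical sketch of the single-lepton pre-selection and object
# selection described above; the cut values come from the text, while
# the function names and the (pt, eta, phi) tuple layout are assumptions.
LEPTON_PT_MIN = 23.0  # GeV

def passes_lepton_filter(electrons, muons):
    """True if there is an electron (|eta| < 3) or a muon (|eta| < 2.1) with pT > 23 GeV."""
    has_e = any(pt > LEPTON_PT_MIN and abs(eta) < 3.0 for pt, eta, _ in electrons)
    has_mu = any(pt > LEPTON_PT_MIN and abs(eta) < 2.1 for pt, eta, _ in muons)
    return has_e or has_mu

def select(objects, pt_min, eta_max, n_max):
    """Keep up to n_max objects with pT > pt_min and |eta| < eta_max, highest pT first."""
    kept = [o for o in objects if o[0] > pt_min and abs(o[1]) < eta_max]
    return sorted(kept, key=lambda o: o[0], reverse=True)[:n_max]

def filter_event(electrons, muons, jets):
    """Return the selected objects, or None if the event fails the lepton filter."""
    if not passes_lepton_filter(electrons, muons):
        return None
    return {
        "electrons": select(electrons, 3.0, 3.0, 4),   # pT > 3 GeV, |eta| < 3
        "muons": select(muons, 3.0, 2.1, 4),           # pT > 3 GeV, |eta| < 2.1
        "jets": select(jets, 15.0, 4.0, 10),           # pT > 15 GeV, |eta| < 4
    }
```

Note that the trigger-like lepton requirement is applied first, and only then are the looser per-object cuts used to fill the event content.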

The following SM processes are relevant to this study (charge conjugation is implicit):

-

Inclusive W boson production, where the W boson decays to a charged lepton ℓ (ℓ = e, μ, τ) and a neutrino ν (59.2% of the dataset),

-

Inclusive Z boson production, with Z → ℓℓ (ℓ = e, μ, τ) (6.7% of the dataset),

-

Top quark-antiquark pair (tt̄) production (0.3% of the dataset),

-

QCD multijet production (33.8% of the dataset).

The relative contributions to the dataset, listed in parentheses, take into account the production cross section (related to the probability of producing a given process in an LHC collision) and the fraction of events accepted by the event selection described above. These four samples are mixed to form a realistic data stream populated by known SM processes (collectively referred to as background), which is provided in Ref. 24. An AD algorithm can thus be trained on this sample to learn the underlying structure of the background and identify a new-physics signature (the signal) as an outlier in the distribution of the learned metric.
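
The expected number of selected events from process i is proportional to its cross section σi times its selection efficiency εi, so its share of the stream is fi = σi εi / Σj σj εj. The sketch below computes these shares; the function name and the example inputs are hypothetical and are not the cross sections or efficiencies used for this dataset.

```python
def mixture_fractions(samples):
    """Relative contribution of each process to a selected data stream.

    samples maps a process name to (cross_section, efficiency), where the
    efficiency is the fraction of generated events passing the selection.
    The expected selected yield is proportional to cross_section * efficiency.
    """
    yields = {name: xsec * eff for name, (xsec, eff) in samples.items()}
    total = sum(yields.values())
    return {name: y / total for name, y in yields.items()}

# Illustrative inputs only (arbitrary units), not the values used in the paper:
example = mixture_fractions({"A": (100.0, 0.01), "B": (1.0, 0.5), "C": (10.0, 0.05)})
```

This is why a process with a large cross section but a low acceptance, such as QCD multijet production under a lepton filter, can still contribute a sizable share of the stream.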

To study the performance of AD algorithms, four signal datasets are provided:

-

A leptoquark (LQ) with an 80 GeV mass, decaying to a b quark and a τ lepton25,

-

A neutral scalar boson (A) with a 50 GeV mass, decaying to two off-shell Z bosons, each forced to decay to two leptons: A → 4ℓ26,

-

A scalar boson with a 60 GeV mass, decaying to two tau leptons: h⁰ → ττ27,

-

A charged scalar boson with a 60 GeV mass, decaying to a tau lepton and a neutrino: h± → τν28.

These samples are generated using the same code and workflow as the SM events.

In total, the SM cocktail dataset consists of 8,209,492 events, of which 4 million are used to define the training dataset24. The remaining events are mixed with events from the secret new-physics process to generate the blackbox dataset29. Together with the particle momenta, the blackbox dataset includes the event numbers needed to match each event to its ground-truth bit. The signal benchmark samples in Refs. 25,26,27,28 amount to 1,848,068 events in total. These samples are available to test specific algorithms before running them on the blackbox.
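
A common way to test an algorithm on the signal benchmarks is to fix the threshold from SM scores at a chosen background acceptance and then measure the fraction of signal events above it. The sketch below illustrates this with a deliberately simple toy score (the summed squared z-score of the input features, fitted on SM events). This score is an assumption chosen for illustration, not the AD method of this paper or of Refs. 9,10, and all function names are hypothetical.

```python
import statistics

# Toy stand-in for an AD algorithm, not a method from this paper: fit
# per-feature means and standard deviations on background-only events and
# score each event by its summed squared z-score (larger = more anomalous).

def fit_background(events):
    """events: list of equal-length feature lists from background-only data."""
    columns = list(zip(*events))
    means = [statistics.fmean(col) for col in columns]
    # a constant feature would give a zero width; fall back to 1 to avoid dividing by 0
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stds

def anomaly_score(event, means, stds):
    return sum(((x - m) / s) ** 2 for x, m, s in zip(event, means, stds))

def threshold_at_rate(bkg_scores, rate):
    """Threshold such that about a fraction `rate` of background scores lie above it."""
    ordered = sorted(bkg_scores)
    k = max(0, len(ordered) - 1 - int(rate * len(ordered)))
    return ordered[k]

def efficiency(scores, threshold):
    """Fraction of events flagged as anomalous (score strictly above threshold)."""
    return sum(score > threshold for score in scores) / len(scores)
```

In use, the threshold would be derived from scores of held-out SM events, and `efficiency` would then be evaluated on scores of one of the signal benchmark samples.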